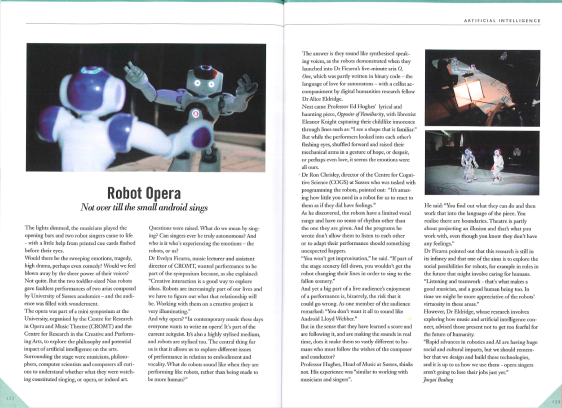

Robert Gyorgyi, a Music student here at Sussex, recently interviewed me for his dissertation on robot opera. He asked me about my recent collaborations, in which I programmed Nao robots to perform in operas composed for them. Below is the transcript.

Interview with Dr Ron Chrisley, 20 April 2018, 12:00, University of Sussex

Bold text: Interviewer (Robert Gyorgyi), [R]: Dr Ron Chrisley

NB: The names ‘Ed’ and ‘Evelyn’ often come up within the interview. ‘Ed’ refers to Ed Hughes, the composer of Opposite of Familiarity (2017), and ‘Evelyn’ to Evelyn Ficarra, the composer of O, One (2017).

How did you hear about the project? Was it a sort of group brainstorming or was the idea proposed to you?

[R] - Evelyn approached me, then we had a meeting when she explained her vision to me.

These NAO robots are social robots designed to speak, not to sing. Was the assignment of their new task your main challenge? How did you do that?

Next week Sussex will host the
