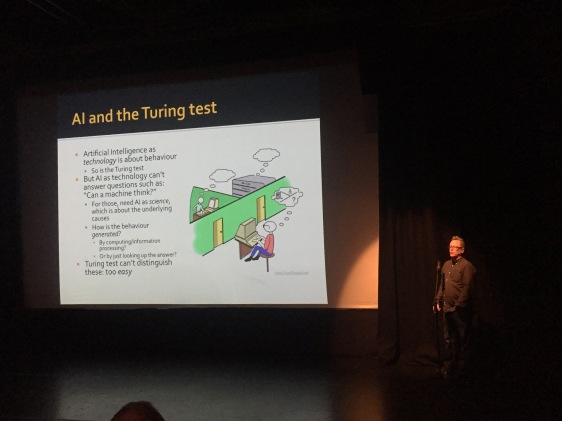

Despite what you may have heard, philosophy at its best consists in rigorous thinking about important issues, and careful examination of the concepts we use to think about those issues. Sometimes this analysis is achieved through considering potential exotic instances of an otherwise everyday concept, and considering whether the concept does indeed apply to that novel case — and if so, how.

In this respect, artificial intelligence (AI), of the actual or sci-fi/thought experiment variety, has given philosophers a lot to chew on, providing a wide range of detailed, fascinating instances to challenge some of our most dearly-held concepts: not just “intelligence”, “mind”, and “knowledge”, but also “responsibility”, “emotion”, “consciousness”, and, ultimately, “human”.

But it’s a two-way street: Philosophy has a lot to offer AI too.

Examining these concepts allows the philosopher to notice inconsistency, inadequacy or incoherence in our thinking about mind, and the undesirable effects this can have on AI design. Once the conceptual malady is diagnosed, the philosopher and AI designer can work together (they are sometimes the same person) to recommend revisions to our thinking and designs that remove the conceptual roadblocks to better performance.

This symbiosis is most clearly observed in the case of artificial general intelligence (AGI), the attempt to produce an artificial agent that is, like humans, capable of behaving intelligently in an unbounded number of domains and contexts.

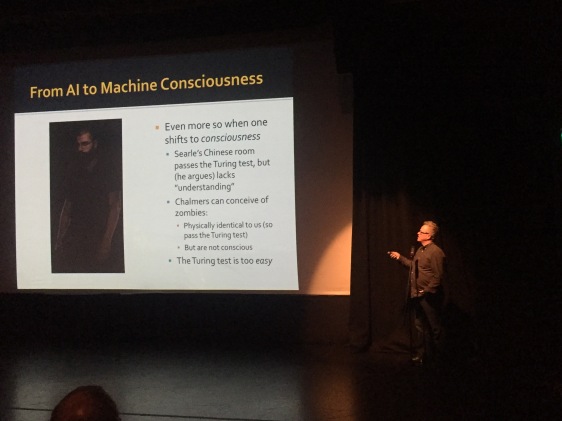

The clearest example of the need for philosophical expertise when doing AGI concerns machine consciousness and machine ethics: at what point does an AGI’s claim to mentality become real enough that we incur moral obligations toward it? Is it at the same time as, or before, it reaches the point at which we would say it is conscious? Or when it has moral obligations of its own? And is it moral for us to get to the point where we have moral obligations to machines? Should that even be AI’s goal?

These are important questions, and it is good that they are being discussed more, even though the possibilities they consider aren’t really on the horizon.

Less well-known is that philosophical sub-disciplines other than ethics have been, and will continue to be, crucial to progress in AGI.

It’s not just the philosophers who say so; quantum computation pioneer and Oxford physicist David Deutsch agrees: “The whole problem of developing AGIs is a matter of philosophy, not computer science or neurophysiology”. That “not” might overstate things a bit (I would soften it to “not only”), but it’s clear that Deutsch’s vision of philosophy’s role in AI will not be limited to being a kind of ethics panel that assesses the “real work” done by others.

What’s more, philosophy’s relevance doesn’t just kick in once one starts working on AGI, a fact that substantially increases its market share. It’s an understatement to say that AGI is a subset of AI in general. Nearly all of the AI that is at work now providing relevant search results, classifying images, driving cars, and so on is not domain-independent AGI; it is technological, practical AI that exploits the particularities of its domain and relies on human support to augment its non-autonomy to produce a working system. But philosophical expertise can be of use even to this more practical, less Hollywood, kind of AI design.

The clearest point of connection is machine ethics.

But here the questions are not the hypothetical ones about whether a (far-future) AI has moral obligations to us, or we to it. Rather the questions will be more like this:

– How should we trace our ethical obligations to each other when the causal link between us and some undesirable outcome for another is mediated by a highly complex information process that involves machine learning and apparently autonomous decision-making?

– Do our previous ethical intuitions about, e.g., product liability apply without modification, or do we need some new concepts to handle these novel levels of complexity and (at least apparent) technological autonomy?

As with AGI, the connection between philosophy and technological, practical AI is not limited to ethics. For example, different philosophical conceptions of what it is to be intelligent suggest different kinds of designs for driverless cars. Is intelligence a disembodied ability to process symbols? Is it merely an ability to behave appropriately? Or is it, at least in part, a skill or capacity to anticipate how one’s embodied sensations will be transformed by the actions one takes?

Contemporary, sometimes technical, philosophical theories of cognition are a good place to start when considering which way of conceptualising the problem and solution will be best for a given AI system, especially in the case of design that has to be truly groundbreaking to be competitive.

Of course, it’s not all sweetness and light. It is true that there has been some philosophical work that has obfuscated the issues around AI, thereby unnecessarily hindering progress. So, to my recommendation that philosophy play a key role in artificial intelligence, terms and conditions apply. But don’t they always?

Next week Sussex will host the

The final E-Intentionality seminar of 2016 will be led by Simon Bowes this Thursday, December 15th at 13:00 in Freeman G22.
