The proceedings of EUCognition 2016 in Vienna, co-edited by myself, Vincent Müller, Yulia Sandamirskaya and Markus Vincze, have just been published online (free access): CEUR-WS.org/Vol-1855.

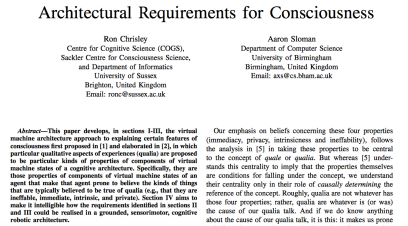

It includes a joint paper by Aaron Sloman and myself, entitled “Architectural Requirements for Consciousness”. Here is the abstract:

This paper develops, in sections I-III, the virtual machine architecture approach to explaining certain features of consciousness first proposed in [1] and elaborated in [2], in which particular qualitative aspects of experiences (qualia) are proposed to be particular kinds of properties of components of virtual machine states of a cognitive architecture. Specifically, they are those properties of components of virtual machine states of an agent that make that agent prone to believe the kinds of things that are typically believed to be true of qualia (e.g., that they are ineffable, immediate, intrinsic, and private). Section IV aims to make it intelligible how the requirements identified in sections II and III could be realised in a grounded, sensorimotor, cognitive robotic architecture.

sday, December 1st, at 13:00 in Freeman